The competitive robo element comes at a (much) later stage, when deep learning serves to improve the machine's lap times far beyond human capabilities. Starting with phase 1, the main goal is to provide a fun racing experience for the human racer. | The competitive robo element comes at a (much) later stage, when deep learning serves to improve the machine's lap times far beyond human capabilities. Starting with phase 1, the main goal is to provide a fun racing experience for the human racer. | ||

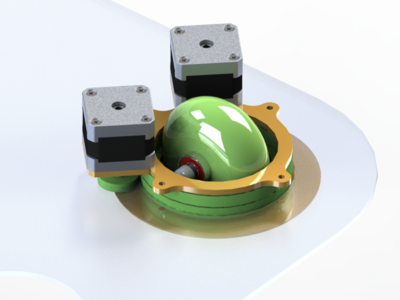

[[File:NurdRacer_steering_WIP.png|400px]] | |||

===== Hardware/features: ===== | ===== Hardware/features: ===== | ||

* 8 steppers . 4 wheels with independent 360 steering and independent drive. | * 8 steppers . 4 wheels with independent 360 steering and independent drive. | ||

* 4 PCBs on wheel units with ATtiny and H | * 4 PCBs on wheel units with ATtiny and H bridges. Each PCB drives 2 motors, reads 2 encoders + 1 accelerometer, and handles low level steering logic. Uses I2C to talk to: | ||

* 1 or more SBCs (RasPi comes to mind) for coms, telemetry, mid level steering/locomotion logic, and more… | * 1 or more SBCs (RasPi comes to mind) for coms, telemetry, mid level steering/locomotion logic, and more… | ||

* WiFi or G4 connecitivity. Or better yet, something open or unregulated. Low latency needed! UDP? Websocket? MQTT? | * WiFi or G4 connecitivity. Or better yet, something open or unregulated. Low latency needed! UDP? Websocket? MQTT? | ||

* A proper stack of batteries. A | * A proper stack of batteries. A charging circuit. China? | ||

===== Later expansion/improvement: ===== | ===== Later expansion/improvement: ===== | ||

* 1 or 2 camera's for optical flow sensing, VR, SLAM | * 1 or 2 camera's for [http://robots.stanford.edu/cs223b05/notes/CS%20223-B%20T1%20stavens_opencv_optical_flow.pdf optical flow] sensing, VR, [https://en.wikipedia.org/wiki/Simultaneous_localization_and_mapping SLAM] | ||

* Proximity sensors (ultrasonic transducers) | * Proximity sensors (ultrasonic transducers) | ||

* GPS | * GPS | ||

* More (GPGPU?) processing power -> more/better batteries | * More (GPGPU?) processing power -> more/better batteries | ||

| Line 34: | Line 35: | ||

* Better motors, wheels | * Better motors, wheels | ||

* Stereo 8K video streaming wirelessly :) | * Stereo 8K video streaming wirelessly :) | ||

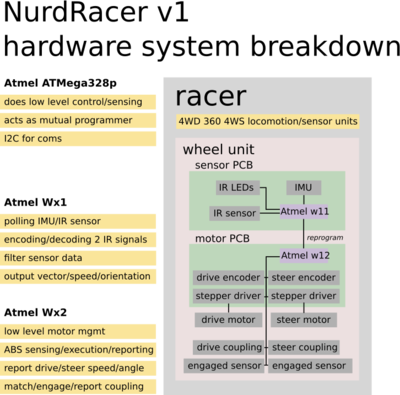

===== Step 1 ===== | |||

[[File:NurdRacer sys diagram v1 step 1.png|400px|And that's just the beginning]] | |||

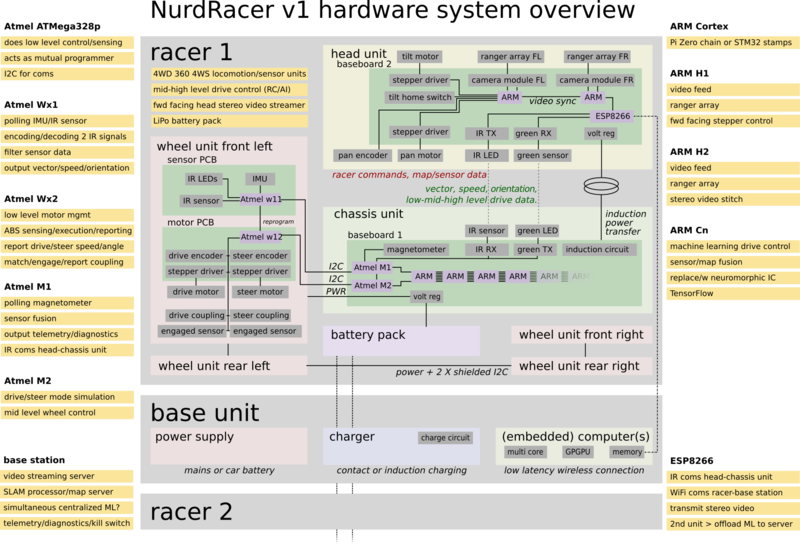

===== Hardware overview ===== | |||

[[File:NurdRacer sys diagram v1 overview.png|800px|Systems overview is subject to change!]] | |||

===== Goals, in order of implementation, affordability and feasability. ===== | ===== Goals, in order of implementation, affordability and feasability. ===== | ||

===== The build ===== | |||

Latest revision as of 18:22, 23 April 2016

| NurdRacer | |
|---|---|
| Participants | |
| Skills | Construction, 3D Printing, Electronics, Coding, Image postprocessing, Machine Learning |
| Status | Planning |
| Niche | Mechanics |
| Purpose | Electronics, fun, world domination |
| Tool | No |
| Location | Space |
| Cost | 100 - 1000 |
| Tool category | |


Concept:

A bit like the robotic maze racers, but with 360-degree four-wheel steering and four-wheel drive, which humans couldn't use effectively without assistance from PID controllers and other tech you would see in, for example, vectored-thrust flight systems.
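To make the PID idea a bit more concrete, here is a minimal sketch of a steering-angle controller for one wheel. It is not part of the NurdRacer design: the class name `SteeringPID`, the gains, and the `out_limit` clamp are all assumptions for illustration. The one detail worth showing is the angle wrap, which lets a wheel that can steer a full 360 degrees always take the shortest way round.

```python
import math
from dataclasses import dataclass


def wrap_angle(a: float) -> float:
    """Wrap an angle in radians to the interval [-pi, pi)."""
    return (a + math.pi) % (2.0 * math.pi) - math.pi


@dataclass
class SteeringPID:
    """Hypothetical PID loop for one wheel's steering angle (radians)."""
    kp: float
    ki: float
    kd: float
    out_limit: float          # assumed clamp on the motor command
    integral: float = 0.0
    prev_error: float = 0.0

    def update(self, target: float, measured: float, dt: float) -> float:
        # Wrapping the error makes the wheel turn the shortest way round.
        error = wrap_angle(target - measured)
        self.integral += error * dt
        derivative = (error - self.prev_error) / dt
        self.prev_error = error
        out = self.kp * error + self.ki * self.integral + self.kd * derivative
        # Clamp the command to what the motor driver is assumed to accept.
        return max(-self.out_limit, min(self.out_limit, out))


if __name__ == "__main__":
    pid = SteeringPID(kp=2.0, ki=0.0, kd=0.0, out_limit=10.0)
    # Target 350 degrees, wheel at 10 degrees: the command should be negative,
    # i.e. turn 20 degrees backwards rather than 340 degrees forwards.
    print(pid.update(math.radians(350), math.radians(10), dt=0.01))
```

On the real wheel units this loop would presumably run in C on the microcontroller; Python is used here only to keep the sketch short.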

The competitive robo element comes at a (much) later stage, when deep learning serves to improve the machine's lap times far beyond human capabilities. Starting with phase 1, the main goal is to provide a fun racing experience for the human racer.

Hardware/features:

- 8 steppers: 4 wheels, each with independent 360-degree steering and independent drive.

- 4 PCBs on wheel units with ATtiny and H-bridges. Each PCB drives 2 motors, reads 2 encoders + 1 accelerometer, and handles low-level steering logic. Uses I2C to talk to:

- 1 or more SBCs (RasPi comes to mind) for comms, telemetry, mid-level steering/locomotion logic, and more…

- WiFi or 4G connectivity. Or better yet, something open or unregulated. Low latency needed! UDP? WebSocket? MQTT?

- A proper stack of batteries. A charging circuit. China?
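As a rough illustration of the mid-level steering/locomotion logic that could run on the SBC, the sketch below converts a desired chassis motion (forward speed, sideways speed, rotation rate) into a steering angle and drive speed for each of the four wheels. This is the standard rigid-body relation for independently steered wheels, not a confirmed part of the NurdRacer design; the wheel positions in `WHEELS` and the function name `wheel_commands` are assumptions.

```python
import math
from typing import List, Sequence, Tuple

# Assumed wheel positions relative to the chassis centre, in metres
# (x points forward, y points left). Purely illustrative values.
WHEELS: List[Tuple[float, float]] = [
    (0.1, 0.1),    # front left
    (0.1, -0.1),   # front right
    (-0.1, 0.1),   # rear left
    (-0.1, -0.1),  # rear right
]


def wheel_commands(
    vx: float,
    vy: float,
    omega: float,
    wheels: Sequence[Tuple[float, float]] = WHEELS,
) -> List[Tuple[float, float]]:
    """Return one (steering angle in rad, drive speed in m/s) pair per wheel.

    vx, vy: desired chassis velocity in m/s; omega: yaw rate in rad/s.
    """
    commands = []
    for x, y in wheels:
        # Velocity of a point on a rigid body: v + omega x r
        wx = vx - omega * y
        wy = vy + omega * x
        speed = math.hypot(wx, wy)
        angle = math.atan2(wy, wx)
        commands.append((angle, speed))
    return commands


if __name__ == "__main__":
    # Drive forward at 1 m/s while rotating at 2 rad/s.
    for angle, speed in wheel_commands(1.0, 0.0, 2.0):
        print(f"angle={math.degrees(angle):7.2f} deg  speed={speed:.3f} m/s")
```

Each (angle, speed) pair would then be sent over I2C to the matching wheel PCB, which handles the low-level control.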

Later expansion/improvement:

- 1 or 2 cameras for optical flow sensing, VR, SLAM

- Proximity sensors (ultrasonic transducers)

- GPS

- More (GPGPU?) processing power -> more/better batteries

- Lighter materials

- Better motors, wheels

- Stereo 8K video streaming wirelessly :)

Step 1

Hardware overview