HAIrk: Difference between revisions

* https://mrdeepfakes.com/forums/threads/guide-using-stable-diffusion-to-generate-custom-nsfw-images.10289/ | |||

Stolen from that site (if you dont wanna click links with 'nsfw' in the url) | |||

* You can drag your favorite result from the output tab on the right back into img2img for further iteration | |||

* The k_euler_a and k_dpm_2_a samplers give vastly different, more intricate results from the same seed & prompt | |||

* Unlike other samplers, k_euler_a can generate high quality results from low steps. Try it with 10-25 instead of 50 | |||

* If you have more Vram but are still forced to use the optimized parameter, you can try --optmized-turbofor a faster experience | |||

* The seed for each generated result is in the output filename if you want to revisit it | |||

* Using the same keywords as a generated image in img2img produces interesting variants | |||

* It's recommended to have your prompts be at least 512 pixels in one dimension, or a 384x384 square at the smallest | |||

* Anything smaller will have heavy artifacting | |||

* 512x512 will always yield the most accurate results as the model was trained at that resolution | |||

* Try Low strength (0.3-0.4) + High CFG in img2img for interesting outputs | |||

* You can use Japanese Unicode characters in prompts | |||

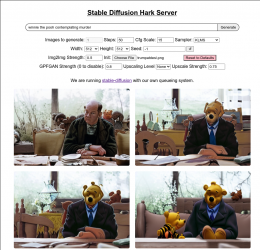

Maybe people can place some cool stuff they made here, and what parameters they used? :) | |||

== Extra links == | |||

* Checkout the GPU stats in [[Grafana]] on https://metrics.nurd.space/d/tAe_RuWVz/erratic-gpu | |||

There are many tutorials for this stuff around, not all as SFW, but still good: | |||

* https://mrdeepfakes.com/forums/threads/guide-using-stable-diffusion-to-generate-custom-nsfw-images.10289/ | |||

* https://www.reddit.com/r/StableDiffusion/comments/wnlsn8/steps_and_cfg_scale_tests/ | |||

* | |||

Revision as of 16:20, 5 September 2022

| hAIrk | |
|---|---|
| Participants | User:buzz, Melan |
| Skills | software, neural networks, gpu |
| Status | Active |
| Niche | Artsy stuff |
| Purpose | Fun |
| Tool | No |
| Location | gpu.vm.nurd.space |
| Cost | |
| Tool category | |

What is this

There are so many fun neural networks to run on GPUs now! We wanted to share the fun of playing with them.

How does it work

For now: http://10.208.30.24:8000/

There's a queuing system in place, and soon it'll get hooked up through ghbot, maybe Discord(?) and perhaps MediaWiki(!!!)

- open webpage

- enter a prompt (this is a piece of text describing what you want to see, for example 'a digital artwork of a rake floating in cyberspace with many fragments of keyboards floating around, photorealistic, trending on artstation')

- click 'generate'

- wait ~45 seconds per queue item (you can't see the queue size yet)

- you get a picture \o/
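Since you can't see the queue yet, here is a back-of-the-envelope sketch for guessing your wait if you know how many people clicked 'generate' before you. It only uses the ~45 seconds per item mentioned above; the function name is made up for illustration and is not part of the web UI.

```python
# Rough figure from this page: one queue item takes about 45 seconds.
SECONDS_PER_ITEM = 45


def estimated_wait_seconds(items_ahead: int) -> int:
    """Guess the wait until your own picture is done.

    items_ahead is the number of requests queued before yours;
    your own request adds one more item on top of that.
    """
    if items_ahead < 0:
        raise ValueError("items_ahead cannot be negative")
    return (items_ahead + 1) * SECONDS_PER_ITEM


if __name__ == "__main__":
    for ahead in (0, 1, 3):
        print(f"{ahead} ahead of you: about {estimated_wait_seconds(ahead)} s")
```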

Stuff to tweak:

- 'cfg scale' controls how strongly the image is pushed towards your prompt; higher values tend to add complexity to the generated image (see the Reddit post somewhere below)

- 'steps' is the number of iterations the model runs to match your prompt. Higher takes longer, but most samplers (the default we use is KLMS) don't do well with fewer than 32 steps. Notably, k_euler_a DOES get some nice results at ~10 steps already, so if you're in a hurry ...
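For the curious: 'cfg scale' is the classifier-free guidance scale. At every step the model makes one noise prediction without the prompt and one with it, and the scale decides how far to push from the first towards the second. A minimal sketch with toy numbers instead of real model outputs (the function name is ours, not something from the web UI):

```python
def apply_cfg(uncond, cond, cfg_scale):
    """Classifier-free guidance on two equally long lists of numbers.

    uncond: prediction made without the prompt
    cond:   prediction made with the prompt
    Result: uncond + cfg_scale * (cond - uncond), element by element.
    """
    if len(uncond) != len(cond):
        raise ValueError("predictions must have the same length")
    return [u + cfg_scale * (c - u) for u, c in zip(uncond, cond)]


if __name__ == "__main__":
    uncond = [0.0, 1.0]
    cond = [1.0, 1.0]
    # A scale of 1.0 just gives back the prompt-conditioned prediction;
    # larger scales exaggerate the difference the prompt makes.
    for scale in (1.0, 7.5):
        print(scale, apply_cfg(uncond, cond, scale))
```

With a scale of 0 the prompt is ignored entirely, which is why very low values give images that barely match what you typed.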

Another thing you can try is remixing existing images with the 'img2img' feature.

- open webpage

- enter a prompt for what you want to see the image become (for example 'trump raking the forest')

- next to 'img2img' click on 'choose file' and choose some trump.jpg, preferably one that is somewhat close to the prompt you want

- lowering 'img2img strength' keeps the result closer to the original picture (under 0.4 there is usually hardly any change, unless maybe you use a high cfg scale; see the tips below)

- click 'generate'

- wait ~45 seconds per queue item (you can't see the queue size yet)

- you get a picture \o/
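Why does a low 'img2img strength' change so little? A sketch, assuming our backend behaves like the reference Stable Diffusion img2img script, where the strength decides how many of the requested steps are actually run on top of your input image. The function name is made up for illustration.

```python
def img2img_steps(strength: float, steps: int) -> int:
    """Number of denoising steps actually applied to the input image.

    Assumption: like the reference img2img script, the image is noised
    up to int(strength * steps) steps and then denoised from there.
    """
    if not 0.0 <= strength <= 1.0:
        raise ValueError("strength must be between 0.0 and 1.0")
    if steps < 0:
        raise ValueError("steps cannot be negative")
    return int(strength * steps)


if __name__ == "__main__":
    for strength in (0.25, 0.5, 1.0):
        print(f"strength {strength}: {img2img_steps(strength, 40)} of 40 steps")
```

So with a low strength only a small part of the steps touches your picture, and at strength 1.0 the original is replaced by noise before denoising starts.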

Please don't abuse it :D

Some tips

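- https://mrdeepfakes.com/forums/threads/guide-using-stable-diffusion-to-generate-custom-nsfw-images.10289/

Stolen from that site (if you don't wanna click links with 'nsfw' in the URL):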

- You can drag your favorite result from the output tab on the right back into img2img for further iteration

- The k_euler_a and k_dpm_2_a samplers give vastly different, more intricate results from the same seed & prompt

- Unlike other samplers, k_euler_a can generate high quality results from low steps. Try it with 10-25 instead of 50

- If you have more VRAM but are still forced to use the optimized parameter, you can try --optimized-turbo for a faster experience

- The seed for each generated result is in the output filename if you want to revisit it

- Using the same keywords as a generated image in img2img produces interesting variants

- It's recommended to have your images be at least 512 pixels in one dimension, or a 384x384 square at the smallest; anything smaller will have heavy artifacting

- 512x512 will always yield the most accurate results as the model was trained at that resolution

- Try Low strength (0.3-0.4) + High CFG in img2img for interesting outputs

- You can use Japanese Unicode characters in prompts
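The size tip above can be written down as a tiny check. This is just our reading of the rule (at least 512 pixels on one side, or at least 384 on both); the function name is made up and nothing like it exists in the web UI as far as we know.

```python
def size_is_recommended(width: int, height: int) -> bool:
    """True if the size follows the tip: one side of at least 512 pixels,
    or both sides of at least 384 pixels."""
    if width <= 0 or height <= 0:
        raise ValueError("width and height must be positive")
    return max(width, height) >= 512 or min(width, height) >= 384


if __name__ == "__main__":
    for size in ((512, 512), (512, 256), (384, 384), (256, 256)):
        verdict = "ok" if size_is_recommended(*size) else "expect artifacts"
        print(size, verdict)
```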

Maybe people can place some cool stuff they made here, and what parameters they used? :)

Extra links

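- Check out the GPU stats in Grafana on https://metrics.nurd.space/d/tAe_RuWVz/erratic-gpu

There are many tutorials for this stuff around, not all of them SFW, but still good:

- https://mrdeepfakes.com/forums/threads/guide-using-stable-diffusion-to-generate-custom-nsfw-images.10289/

- https://www.reddit.com/r/StableDiffusion/comments/wnlsn8/steps_and_cfg_scale_tests/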