NurdRacer

| NurdRacer | |
|---|---|
| Participants | |
| Skills | Construction, 3D Printing, Electronics, Coding, Image postprocessing, Machine Learning |
| Status | Planning |
| Niche | Mechanics |
| Purpose | Electronics, fun, world domination |
| Tool | No |
| Location | Space |
| Cost | 100 - 1000 |
| Tool category | |

Concept:

A bit like the robotic maze racers, but with 360 degree 4 wheel steering / 4 wheel drive that humans couldn't use effectively without assistance from PID systems and other tech you would see in, for example, vectored thrust flight systems.
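As a rough illustration of the kind of PID assistance meant here, the sketch below holds one wheel's steering angle at a target. The class name, gains, and output limit are hypothetical placeholders, not values from this project, and a real controller on the wheel PCBs would need tuning and some form of integral anti-windup.

```python
from dataclasses import dataclass
import math


def wrap_angle(rad: float) -> float:
    """Wrap an angle to the interval [-pi, pi)."""
    return (rad + math.pi) % (2.0 * math.pi) - math.pi


@dataclass
class PID:
    kp: float                 # proportional gain (placeholder value, needs tuning)
    ki: float                 # integral gain
    kd: float                 # derivative gain
    out_limit: float          # absolute limit on the output (hypothetical units)
    integral: float = 0.0
    prev_error: float = 0.0

    def update(self, target: float, measured: float, dt: float) -> float:
        # Steering is continuous over 360 degrees, so take the shortest way round.
        error = wrap_angle(target - measured)
        self.integral += error * dt
        derivative = (error - self.prev_error) / dt
        self.prev_error = error
        out = self.kp * error + self.ki * self.integral + self.kd * derivative
        # Clamp so the motor driver never receives an out-of-range command.
        return max(-self.out_limit, min(self.out_limit, out))
```

Calling `update()` once per control tick with the encoder reading as `measured` gives a correction signal; wrapping the error means a wheel at 10 degrees asked to go to 350 degrees turns 20 degrees backwards rather than 340 degrees forwards.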

The competitive robo element comes at a (much) later stage, when deep learning serves to improve the machine's lap times far beyond human capabilities. Starting with phase 1, the main goal is to provide a fun racing experience for the human racer.

Hardware/features:

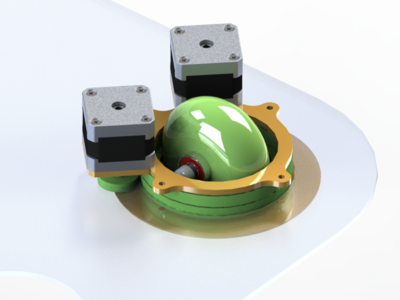

- 8 steppers: 4 wheels, each with independent 360 degree steering and independent drive.

- 4 PCBs on wheel units with ATtiny and H bridges. Each PCB drives 2 motors, reads 2 encoders + 1 accelerometer, and handles low level steering logic. Uses I2C to talk to:

- 1 or more SBCs (RasPi comes to mind) for comms, telemetry, mid level steering/locomotion logic, and more…

- WiFi or 4G connectivity. Or better yet, something open or unregulated. Low latency needed! UDP? WebSocket? MQTT?

- A proper stack of batteries. A charging circuit. China?
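The mid level locomotion logic for four independently steered and driven wheels could look something like the sketch below, which turns a desired chassis motion into a steering angle and speed per wheel. The wheel positions and the function name are assumptions made for illustration; the actual geometry of the NurdRacer is not defined yet.

```python
import math

# Hypothetical wheel positions (x forward, y left) in metres from the chassis centre.
WHEELS = {
    "front_left": (0.15, 0.10),
    "front_right": (0.15, -0.10),
    "rear_left": (-0.15, 0.10),
    "rear_right": (-0.15, -0.10),
}


def wheel_targets(vx, vy, omega):
    """Return {wheel: (steer_angle_rad, speed_m_s)} for a chassis motion command.

    vx, vy: desired chassis velocity in m/s (forward, left).
    omega:  desired yaw rate in rad/s (counter-clockwise positive).
    """
    targets = {}
    for name, (x, y) in WHEELS.items():
        # Velocity of the wheel contact point = chassis velocity + rotation term.
        wx = vx - omega * y
        wy = vy + omega * x
        targets[name] = (math.atan2(wy, wx), math.hypot(wx, wy))
    return targets
```

With `omega = 0` every wheel points the same way, so the racer can translate in any direction without turning its body; with `vx = vy = 0` the wheels line up tangentially and it spins on the spot. Each `(angle, speed)` pair would then be sent over I2C to the wheel unit that owns that wheel.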

Later expansion/improvement:

- 1 or 2 cameras for optical flow sensing, VR, SLAM

- Proximity sensors (ultrasonic transducers)

- GPS

- More (GPGPU?) processing power -> more/better batteries

- Lighter materials

- Better motors, wheels

- Stereo 8K video streaming wirelessly :)

Goals, in order of implementation, affordability and feasibility:

- Build WiFi controlled RC race car with "normal" 4 wheel steering.

- Install camera module -> control it without line of sight with VR glasses. WiFi AP on racer? Stereo cam, 2 cams x 15 fps. Some trickery to restore 30fps for both? Like mix old luminosity with new chroma meant for other eye transposed slightly, or even interframe morphing?

- Neural net for assisted steering & obstacle avoidance, naive evaluation by looking at achieved speeds over distance (inputs in order of implementation: motor encoders, accelerometers (4!), prox. sensors, GPS and eventually SLAM). Assisted steering will allow for seemingly impossible maneuvers.

- Get SLAM to run for, but not on, the NurdRacer. Dump frames to base station in packets, at "quiet" moments. Base station accepts data from multiple sources, redistributes 3D vertex data to NurdRacer(s).

- A separate neural net for completely autonomous steering, navigation and lap improvement with route learning, either through RC manual input or with some recog algorithm to do SLAM on its own the first time, and a human to set waypoints and a start and finish line during or after data acquisition to define the route.

- Upgrade mapping to be humanly acceptable. Visualize competing racers (or ghosts of previous laps) in a virtual environment. Provide camera angles. Output goes where? Entertainment value? Twitch? By serving spectators the virtual perspectives instead of raw camera feed you can inject advertisements or even replace the entire scene, add explosions, debris etc. Use accelerometer data for actuated VR seats or cranial stimulation.

- Multiple autonomous vehicles exchanging SLAM data, updating continuously, actively seeking out changes in the environment. A swarm of them mapping and broadcasting an event, while providing internet/intranet with a mesh network, without getting in the way of humans. They can be easily branded, and their mode of locomotion enables them to keep facing the audience while passing them, providing ample time to read a signaling display, for instance.

- Implement a race car with assisted driving and the electric 4 wheel 360 degree steering on go-kart scale, or larger, in order to carry a human. If desired, you could still be in the driver's seat, in the sense that you get to decide whether the machine overtakes your opponent on the left or the right side. There's no gas pedal, just a "go / neutral" and a "stop" button. Perhaps a win/lose switch. G forces should be limited to spare the human. Obstacle avoidance should be so good at this point that the machine is 100% uncrashable, except maybe by a meteor or a heat seeking missile.
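
One way the G force limit in the last goal might be enforced is to cap how far the commanded velocity may change per control tick. This is only a sketch: the function name and the default `max_g` of 1.5 are assumptions chosen for illustration, not a limit defined by this project.

```python
import math

G = 9.81  # standard gravity in m/s^2 (rounded)


def limit_acceleration(prev_v, target_v, dt, max_g=1.5):
    """Step from prev_v toward target_v without exceeding max_g.

    prev_v, target_v: 2D velocity tuples (vx, vy) in m/s.
    dt:               control period in seconds.
    max_g:            hypothetical comfort limit, in multiples of g.
    """
    dvx = target_v[0] - prev_v[0]
    dvy = target_v[1] - prev_v[1]
    dv = math.hypot(dvx, dvy)
    max_dv = max_g * G * dt  # largest allowed velocity change this tick
    if dv <= max_dv:
        return target_v
    # Keep the direction of the requested change, shrink its magnitude.
    scale = max_dv / dv
    return (prev_v[0] + dvx * scale, prev_v[1] + dvy * scale)
```

Because the limit is applied to the velocity vector as a whole, it covers braking, accelerating and cornering alike, which matters for a vehicle that can change direction without turning its body.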