HAIrk

No edit summary |

|||

| (8 intermediate revisions by 3 users not shown) | |||

| Line 11: | Line 11: | ||

== What is this == | == What is this == | ||

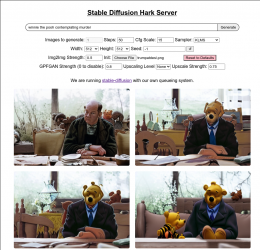

NURDspace goes Stable Diffusion! | |||

Stable Diffusion is a machine learning model developed by Stability.ai to generate digital images from natural language descriptions. | |||

Right now we are running the latest version which is 1.4. | |||

== How does it work == | == How does it work == | ||

For now ; http://10.208.30.24:8000/ | For now ; http://10.208.30.24:8000/ | ||

For Discord, contact Melan to get an invite link for the bot. | |||

There's a queuing system in place, and soon it'll get hooked up through ghbot | There's a queuing system in place, and soon it'll get hooked up through ghbot and perhaps mediawiki(!!!) | ||

# open webpage | # open webpage | ||

# enter a prompt (this is a piece of text describing what you want to see , for example 'a digital artwork of a rake floating in cyberspace with many fragments of keyboards floating around , photorealistic , trending on artstation' | # enter a prompt (this is a piece of text describing what you want to see , for example 'a digital artwork of a rake floating in cyberspace with many fragments of keyboards floating around , photorealistic , trending on artstation' | ||

# click 'generate' | # click 'generate' | ||

# wait ~45 seconds per queue item | # wait ~45 seconds per queue item | ||

# you get a picture \o/ | # you get a picture \o/ | ||

Another thing you can try is remixing original images with the 'img2img' features. | Another thing you can try is remixing original images with the 'img2img' features. | ||

| Line 34: | Line 35: | ||

# enter a prompt for what you want to see the image become (for example 'trump raking the forest') | # enter a prompt for what you want to see the image become (for example 'trump raking the forest') | ||

# next to 'img2img' click on 'choose file' , choose some trump.jpg , preferebly somewhat close to the prompt you want | # next to 'img2img' click on 'choose file' , choose some trump.jpg , preferebly somewhat close to the prompt you want | ||

# click 'generate' | # click 'generate' | ||

# wait ~45 seconds per queue item | # wait ~45 seconds per queue item | ||

# you get a picture \o/ | # you get a picture \o/ | ||

| Line 43: | Line 43: | ||

== Some tips == | == Some tips == | ||

Tips by [[User:Buzz|buZz]] ([[User talk:Buzz|talk]]) | |||

* ' cfg scale ' has some complexity addition to the generated image (see the reddit post somewhere below) | |||

* ' steps ' is amount of iterations the model runs on top of itself to match your prompt, higher takes longer, but most samplers (default we use is KLMS) dont do well with <32 steps , notably k_euler_a DOES get some nice results for ~10 steps already, so if you're in a hurry ... | |||

* lowering ' img2img strength ' will make it MORE the original picture (under 0.4 is usually nowhere near any changes, unless you use a high cfg scale?? see stolentips below) | |||

* you can subdivide topics in your prompt with a comma. for example ('a duck, a pond, a submarine') | |||

Stolen from this site (if you dont wanna click links with 'nsfw' in the url) (i removed the tips about running the model) | |||

* https://mrdeepfakes.com/forums/threads/guide-using-stable-diffusion-to-generate-custom-nsfw-images.10289/ | * https://mrdeepfakes.com/forums/threads/guide-using-stable-diffusion-to-generate-custom-nsfw-images.10289/ | ||

* You can drag your favorite result from the output tab on the right back into img2img for further iteration | * You can drag your favorite result from the output tab on the right back into img2img for further iteration | ||

| Line 58: | Line 65: | ||

* You can use Japanese Unicode characters in prompts | * You can use Japanese Unicode characters in prompts | ||

Maybe people can place some cool stuff they made here, and what parameters they used? :) | == Some good prompt examples == | ||

a digital artwork of a active hackerspace during a rave, many laptops, ambient lighting, fantasy, steampunk, trending on artstation | |||

[[File:SD-ravinghackerspaces.png]] | |||

Maybe people can place some cool stuff they made here, and what prompt and/or parameters they used? :) | |||

== Extra links == | == Extra links == | ||

| Line 68: | Line 85: | ||

* https://mrdeepfakes.com/forums/threads/guide-using-stable-diffusion-to-generate-custom-nsfw-images.10289/ | * https://mrdeepfakes.com/forums/threads/guide-using-stable-diffusion-to-generate-custom-nsfw-images.10289/ | ||

* https://www.reddit.com/r/StableDiffusion/comments/wnlsn8/steps_and_cfg_scale_tests/ | * https://www.reddit.com/r/StableDiffusion/comments/wnlsn8/steps_and_cfg_scale_tests/ | ||

* | * https://strikingloo.github.io/stable-diffusion-vs-dalle-2 | ||

* https://thealgorithmicbridge.substack.com/p/stable-diffusion-is-the-most-important | |||

* | |||

Prompt generators are a thing too : | |||

* https://www.phase.art/ | |||

* https://promptomania.com/ | |||

Textual inversion is a thing now aswell (soon/eventually in our version) : | |||

* https://huggingface.co/spaces/pharma/CLIP-Interrogator | |||

=== GPU === | |||

We are using a Gigabyte P106-100 (Basically a Nvidia GTX1060 6GB trimmed down for mining), which is PCIe forwarded/exposed to a KVM running on [[Erratic]]. | |||

Of course, better would be a higher memory card, so we can run Dall-E mini mega as well (seemingly requires 24GB). Although for just Stable Diffusion a faster more current card, with 8~12GB of ram would be suitable enough as well as we are running a fork that uses less memory. A Geforce 3060 with 12GB of vram is a interesting, not too expensive (~350 euro) upgrade. | |||

<gallery> | |||

GPU_fan.jpg|A Sun Ace 80 fan has been strapped to the GPU | |||

GPU_erratic.jpg|The GPU fitting "snugly" inside Erratic | |||

</gallery> | |||

=== Wanna run your own? === | === Wanna run your own? === | ||

* http://github.com/lstein/stable-diffusion | * http://github.com/lstein/stable-diffusion | ||

* https://github.com/borisdayma/dalle-mini | * https://github.com/borisdayma/dalle-mini | ||

* https://stability.ai | |||

=== Wanna train your own? === | |||

* dataset is 240TB for 384x384 resolution images, https://laion.ai/blog/laion-5b/ | |||

* a search engine for the dataset is here ; https://rom1504.github.io/clip-retrieval/ | |||

=== 24GB cards === | |||

* https://tweakers.net/videokaarten/vergelijken/#filter:PYxNCsIwEIXvMusIrTWp5gCFLlzlBCEddSRtQhJELLm7ExBXj_e9nx1oe2Eq5hGiQY-uUNhA36zPKCCkBdNE6BfQEBM9M_ygCakws9n9SUQ3c-_QC4j2joY-CLrvOtGWDq_Ev2wy9ybyBVMGvcM4XM5N1xbD8SRHxY-rfbNTUg4Kaq1f | |||

== what if it breaks == | |||

* login to nurds@gpu.vm.nurd.space | |||

* screen -S gpu | |||

* under dreamingAPI start run.sh | |||

* under stable-diffusion-webui start webui.sh | |||

Latest revision as of 18:09, 21 December 2022

| hAIrk | |
|---|---|
| Participants | User:buzz, Melan |
| Skills | software, neural networks, gpu |
| Status | Active |
| Niche | Artsy stuff |
| Purpose | Fun |
| Tool | No |
| Location | gpu.vm.nurd.space |
| Cost | |
| Tool category | |


What is this

NURDspace goes Stable Diffusion!

Stable Diffusion is a machine learning model developed by Stability.ai to generate digital images from natural language descriptions.

Right now we are running version 1.4, the latest version at the time of writing.

How does it work

For now, the web interface is at http://10.208.30.24:8000/. To use the Discord bot, contact Melan for an invite link.

There's a queuing system in place, and soon it'll also be hooked up through ghbot and perhaps even MediaWiki (!!!)

- open the web page

- enter a prompt: a piece of text describing what you want to see, for example 'a digital artwork of a rake floating in cyberspace with many fragments of keyboards floating around, photorealistic, trending on artstation'

- click 'generate'

- wait about 45 seconds per item in the queue

- you get a picture \o/
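As a rough rule of thumb, "about 45 seconds per item in the queue" means your wait grows linearly with the number of requests ahead of you. Below is a tiny sketch of that arithmetic. The 45-second figure is this page's own estimate. The function name is purely illustrative: the page doesn't say the queue exposes anything like it.

```python
SECONDS_PER_ITEM = 45  # rough per-item estimate from this page; real time varies with steps, size and sampler


def estimated_wait_seconds(items_ahead: int, seconds_per_item: float = SECONDS_PER_ITEM) -> float:
    """Estimate how long until your own image is done (hypothetical helper).

    items_ahead: number of queued requests in front of yours.
    Your own request takes one slot as well, hence the +1.
    """
    if items_ahead < 0:
        raise ValueError("items_ahead cannot be negative")
    return (items_ahead + 1) * seconds_per_item


if __name__ == "__main__":
    for ahead in (0, 3, 10):
        print(f"{ahead} ahead -> ~{estimated_wait_seconds(ahead) / 60:.1f} min")
```

So with three people ahead of you, expect roughly three minutes.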

Another thing you can try is remixing existing images with the 'img2img' feature:

- open the web page

- enter a prompt describing what you want the image to become (for example 'trump raking the forest')

- next to 'img2img', click 'choose file' and pick an image (say, some trump.jpg), preferably one somewhat close to the prompt you want

- click 'generate'

- wait about 45 seconds per item in the queue

- you get a picture \o/

Please don't abuse it :D

Some tips

Tips by buZz:

- 'cfg scale' (classifier-free guidance scale) controls how strongly the image is pushed toward your prompt; higher values follow the prompt more closely and tend to add complexity to the generated image (see the Reddit steps and CFG scale tests under Extra links)

- 'steps' is the number of denoising iterations the model runs on its own output to match your prompt. More steps take longer, and most samplers (our default is KLMS) don't do well with fewer than 32 steps. Notably, k_euler_a already gets nice results at around 10 steps, so if you're in a hurry...

- lowering 'img2img strength' keeps the result closer to the original picture. Below 0.4 you usually see hardly any change, apparently unless you also use a high cfg scale (see the stolen tips below)

- you can separate topics in your prompt with commas, for example 'a duck, a pond, a submarine'
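Under the hood, 'cfg scale' is the weight used for classifier-free guidance. At each step the model predicts the noise twice: once with your prompt, and once with an empty prompt. It then extrapolates from the second prediction toward the first: guided = uncond + cfg_scale * (cond - uncond). The toy sketch below shows only that combination step, on plain lists of numbers. The real thing runs on latent tensors inside the sampler, and the function name is made up for illustration.

```python
from typing import List


def cfg_combine(noise_uncond: List[float], noise_cond: List[float], cfg_scale: float) -> List[float]:
    """Classifier-free guidance combination step (illustrative only).

    noise_uncond: prediction with an empty prompt
    noise_cond:   prediction with your prompt
    cfg_scale = 1 gives just the prompt-conditioned prediction;
    cfg_scale > 1 exaggerates whatever the prompt adds.
    """
    if len(noise_uncond) != len(noise_cond):
        raise ValueError("predictions must have the same shape")
    return [u + cfg_scale * (c - u) for u, c in zip(noise_uncond, noise_cond)]


# Where the prompt changes the prediction (first value), a scale of 7.5 amplifies that change;
# where it doesn't (second value), nothing happens.
print(cfg_combine([0.0, 1.0], [1.0, 1.0], 7.5))
```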

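Stolen from this guide (copied here in case you don't want to click links with 'nsfw' in the URL; I removed the tips about running the model yourself): https://mrdeepfakes.com/forums/threads/guide-using-stable-diffusion-to-generate-custom-nsfw-images.10289/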

- You can drag your favorite result from the output tab on the right back into img2img for further iteration

- The k_euler_a and k_dpm_2_a samplers give vastly different, more intricate results from the same seed & prompt

- Unlike other samplers, k_euler_a can generate high quality results from low steps. Try it with 10-25 instead of 50

- The seed for each generated result is in the output filename if you want to revisit it

- Using the same keywords as a generated image in img2img produces interesting variants

- It's recommended to make your output at least 512 pixels in one dimension, or at least a 384x384 square; anything smaller will have heavy artifacting

- 512x512 generally yields the most accurate results, as the model was trained at that resolution

- Try low strength (0.3-0.4) + high CFG in img2img for interesting outputs

- You can use Japanese Unicode characters in prompts
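Two of the tips above can be written out as small checks. First, img2img 'strength': in the original CompVis img2img script (and in Hugging Face diffusers), strength decides how far your input image is noised. That in turn decides how many of the sampling steps actually work on it, roughly int(strength * steps). We are assuming our fork behaves the same way, which this page doesn't confirm. Second, the resolution rule of thumb from this list. Both function names are hypothetical.

```python
def img2img_denoise_steps(steps: int, strength: float) -> int:
    """Sampling steps that actually modify the init image.

    Follows the int(strength * steps) idea from the CompVis img2img script
    (assumption: our fork does the same).
    strength 0.0 -> image comes back (almost) untouched
    strength 1.0 -> init image fully noised, i.e. basically txt2img
    """
    if not 0.0 <= strength <= 1.0:
        raise ValueError("strength must be between 0.0 and 1.0")
    return int(strength * steps)


def size_is_recommended(width: int, height: int) -> bool:
    """Resolution tip from the list above: at least 512 px on one side,
    or at least a 384x384 square. Smaller images tend to artifact heavily."""
    return max(width, height) >= 512 or min(width, height) >= 384


if __name__ == "__main__":
    print(img2img_denoise_steps(50, 0.35))  # "low strength" leaves only a few steps of change
    print(size_is_recommended(512, 512), size_is_recommended(256, 256))
```

With 50 steps, the "low strength (0.3-0.4)" tip means only about 15-20 steps of actual change, which is why the result stays close to the original.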

Some good prompt examples

a digital artwork of a active hackerspace during a rave, many laptops, ambient lighting, fantasy, steampunk, trending on artstation

(result: SD-ravinghackerspaces.png)

Feel free to add cool stuff you made here, along with the prompt and/or parameters you used :)
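The example prompt above follows the usual pattern: a subject first, then comma-separated modifiers. If you script batches of prompts, that pattern is easy to assemble. This is a trivial sketch, and `build_prompt` is a hypothetical helper that isn't part of our setup.

```python
def build_prompt(subject: str, *modifiers: str) -> str:
    """Join a subject and style modifiers with ', ', skipping empty parts."""
    parts = [subject.strip(), *(m.strip() for m in modifiers)]
    return ", ".join(p for p in parts if p)


# Rebuilds the example prompt from this section, modifier by modifier
prompt = build_prompt(
    "a digital artwork of a active hackerspace during a rave",
    "many laptops", "ambient lighting", "fantasy", "steampunk", "trending on artstation",
)
print(prompt)
```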

Extra links

- Check out the GPU stats in Grafana at https://metrics.nurd.space/d/tAe_RuWVz/erratic-gpu

There are many tutorials around; not all of them are SFW, but they are still good:

- https://mrdeepfakes.com/forums/threads/guide-using-stable-diffusion-to-generate-custom-nsfw-images.10289/

- https://www.reddit.com/r/StableDiffusion/comments/wnlsn8/steps_and_cfg_scale_tests/

- https://strikingloo.github.io/stable-diffusion-vs-dalle-2

- https://thealgorithmicbridge.substack.com/p/stable-diffusion-is-the-most-important

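Prompt generators are a thing too:

- https://www.phase.art/

- https://promptomania.com/

Textual inversion is a thing now as well (coming to our version eventually):

- https://huggingface.co/spaces/pharma/CLIP-Interrogator (strictly speaking, this one is a CLIP interrogator, which suggests a prompt for an existing image, rather than textual inversion)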

GPU

We are using a Gigabyte P106-100 (basically an NVIDIA GTX 1060 6GB cut down for mining), which is passed through over PCIe to a KVM virtual machine running on Erratic.

Of course, a card with more memory would be better, so we could run DALL-E Mega (the large dalle-mini model) as well, which seemingly requires 24GB. For Stable Diffusion alone, though, a faster, more current card with 8-12GB of VRAM would be good enough, since we are running a fork that uses less memory. A GeForce RTX 3060 with 12GB of VRAM is an interesting, not too expensive (~350 euro) upgrade.

- GPU_fan.jpg: a Sun Ace 80 fan has been strapped to the GPU

- GPU_erratic.jpg: the GPU fitting "snugly" inside Erratic
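To get a feel for why 6GB is tight, here is some back-of-the-envelope arithmetic for the model weights alone. It uses the parameter counts published for Stable Diffusion v1 (860M UNet, 123M text encoder). It deliberately leaves out the VAE, activations, and CUDA overhead, so real VRAM use is higher. Running in half precision (fp16) halves the weight memory, and that is one common trick memory-saving forks rely on.

```python
# Parameter counts as published for Stable Diffusion v1 (UNet + CLIP text encoder).
# NOT included: VAE, activations during sampling, CUDA context -> real usage is higher.
UNET_PARAMS = 860_000_000
TEXT_ENCODER_PARAMS = 123_000_000

BYTES_PER_PARAM = {"fp32": 4, "fp16": 2}


def weights_gib(precision: str) -> float:
    """Memory needed just to hold the weights, in GiB."""
    total_params = UNET_PARAMS + TEXT_ENCODER_PARAMS
    return total_params * BYTES_PER_PARAM[precision] / 1024**3


for precision in ("fp32", "fp16"):
    print(f"{precision}: ~{weights_gib(precision):.2f} GiB for the weights alone")
```

That is roughly 3.7 GiB in fp32 versus 1.8 GiB in fp16, before any actual image is generated.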

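Wanna run your own?

- http://github.com/lstein/stable-diffusion

- https://github.com/borisdayma/dalle-mini

- https://stability.ai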

Wanna train your own?

- the dataset (LAION-5B) is 240TB for images at 384x384 resolution: https://laion.ai/blog/laion-5b/

- a search engine for the dataset is here: https://rom1504.github.io/clip-retrieval/
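To put 240TB in perspective, here is some quick arithmetic. It uses the 240TB figure above and LAION-5B's published size of 5.85 billion image-text pairs. It assumes "TB" means decimal terabytes. The 1 Gbit/s link speed is a hypothetical value, chosen just for scale.

```python
# 240 TB: figure from the line above; 5.85e9 pairs: LAION-5B's published size.
# Assumption: decimal terabytes (10**12 bytes).
DATASET_BYTES = 240e12
NUM_PAIRS = 5.85e9
LINK_GBIT_PER_S = 1.0  # hypothetical download speed, only for scale

avg_kb_per_image = DATASET_BYTES / NUM_PAIRS / 1e3
download_days = DATASET_BYTES * 8 / (LINK_GBIT_PER_S * 1e9) / 86_400

print(f"~{avg_kb_per_image:.0f} kB per image on average")
print(f"~{download_days:.0f} days to download at {LINK_GBIT_PER_S:g} Gbit/s")
```

That works out to roughly 41 kB per image, and over three weeks of downloading even on a saturated gigabit line.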

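24GB cards

- Tweakers comparison of 24GB cards: https://tweakers.net/videokaarten/vergelijken/#filter:PYxNCsIwEIXvMusIrTWp5gCFLlzlBCEddSRtQhJELLm7ExBXj_e9nx1oe2Eq5hGiQY-uUNhA36zPKCCkBdNE6BfQEBM9M_ygCakws9n9SUQ3c-_QC4j2joY-CLrvOtGWDq_Ev2wy9ybyBVMGvcM4XM5N1xbD8SRHxY-rfbNTUg4Kaq1f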

What if it breaks

- log in to nurds@gpu.vm.nurd.space

- start a screen session: screen -S gpu

- in the dreamingAPI directory, start run.sh

- in the stable-diffusion-webui directory, start webui.sh
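Before restarting things, it can help to check whether the web interface actually responds. This is a minimal sketch using only the Python standard library. It assumes the web interface is still at the address given under "How does it work", and it treats any successful HTTP response as "up". It does not check the dreamingAPI queue separately.

```python
import urllib.request

WEBUI_URL = "http://10.208.30.24:8000/"  # address from "How does it work"; may change


def webui_is_up(url: str = WEBUI_URL, timeout: float = 5.0) -> bool:
    """Return True if the web interface answers with a successful HTTP status."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            # urlopen follows redirects, so this is the final status code
            return 200 <= resp.status < 400
    except OSError:
        # URLError/HTTPError (connection refused, DNS failure, error status)
        # and socket timeouts are all OSError subclasses
        return False


if __name__ == "__main__":
    print("web interface is up" if webui_is_up() else "web interface looks down, time to restart it")
```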